Large language models have evolved rapidly, especially with the arrival of GPT-4. Training GPT-4 required an extremely large amount of computation (measured in FLOPs), and running it requires significant computational resources at inference time. OpenAI has not disclosed the model’s parameter count; based on trends in AI development, it is reasonable to assume that GPT-4 has more parameters than its predecessors, possibly in the range of 200 billion to 1 trillion. GPT-4 can handle a wide range of tasks and is evolving quickly on many fronts.

Turing Test

The Turing Test, proposed by British mathematician and computer scientist Alan Turing in 1950, is a measure of a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human. Turing introduced the test in his seminal paper “Computing Machinery and Intelligence,” where he asked the question, “Can machines think?” Instead of directly answering this, Turing proposed a practical test, which has since become known as the Turing Test.

Setup of Test

The classic Turing Test involves three participants: a human judge, a human respondent, and a machine designed to generate human-like responses. The judge interacts with both the human and the machine through a text-based interface (to prevent any bias based on physical appearance or voice). After a series of questions and answers, the judge must decide which participant is the machine and which is the human. If the judge cannot reliably distinguish between the human and the machine, the machine is said to have passed the Turing Test.

Need for Turing Test

Benchmark for Artificial Intelligence:

The Turing Test provides a practical benchmark for assessing the progress and capabilities of artificial intelligence (AI). It shifts the question from abstract philosophical debates to a concrete, observable interaction. Read Alan Turing’s original paper for more information.

Human-Machine Interaction:

The test evaluates how well a machine can replicate human conversational patterns and understanding, which is crucial for applications like virtual assistants, customer service bots, and interactive AI.

Understanding Intelligence:

By testing whether a machine can imitate human behavior convincingly, the Turing Test helps researchers explore the nature of intelligence, both artificial and human.

Ethical and Philosophical Implications:

The Turing Test raises important ethical and philosophical questions about the nature of consciousness, personhood, and the ethical treatment of intelligent machines.

Development of AI Technologies:

Passing the Turing Test requires advanced natural language processing, understanding, and generation capabilities, which drive the development of more sophisticated AI technologies, as reflected in research published in venues such as the Journal of Artificial Intelligence Research.

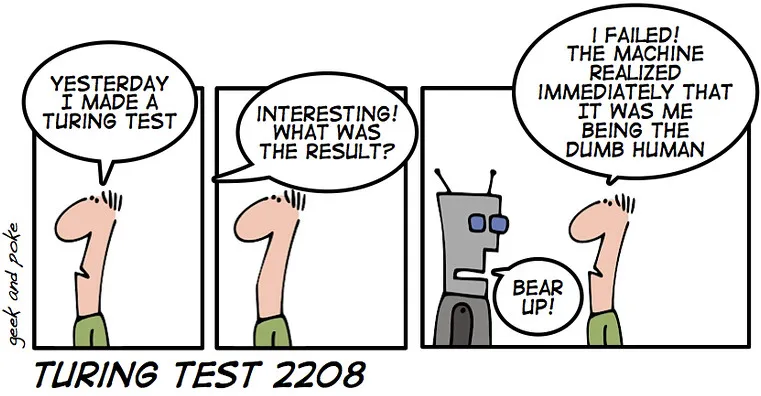

Criticisms and Alternatives

While the Turing Test is historically significant and still influential, it has faced criticisms and inspired alternative approaches. Critics argue that the Turing Test oversimplifies intelligence, focusing solely on linguistic capabilities rather than other forms of intelligence and understanding. The test is based on the machine’s ability to deceive the judge into thinking it is human, which may not necessarily correlate with true understanding or intelligence.

Various alternatives and extensions to the Turing Test have been proposed, such as the Winograd Schema Challenge and the Lovelace Test, which focus on different aspects of intelligence.

The Turing Test therefore remains a foundational concept in AI research, serving as both a practical benchmark and a philosophical exploration of machine intelligence. Its significance lies in offering a concrete way to gauge AI’s progress in emulating human-like behavior and understanding.

Does GPT-4 pass the Turing Test today? Answering that question for an advanced model like ChatGPT-4 means designing a rigorous experiment to see whether the AI can convincingly mimic human behavior.

Understanding the Turing Test

As described above, the Turing Test, proposed by Alan Turing in 1950, assesses whether a machine can exhibit intelligent behavior indistinguishable from that of a human. In its simplest form, a human judge interacts with both a machine and a human through a text-based interface. If the judge cannot reliably tell the machine from the human, the machine is said to have passed the test.

Setting Up the Turing Test with ChatGPT-4

Participants:

- Human Judge(s): Knowledgeable in various subjects but unaware of who (or what) they are interacting with.

- Human Interlocutors: Individuals who will also interact with the judge.

- ChatGPT-4: The AI being tested.

Interface:

- Use a text-based chat interface where the judge cannot see or hear the interlocutors.

Procedure:

- Blind Setup: Ensure the judge does not know which participant is the AI and which is human.

- Multiple Rounds: Conduct several rounds of conversations on a variety of topics (e.g., current events, abstract reasoning, personal anecdotes).

- Random Assignment: Randomly assign the AI and human interlocutors to different rounds to avoid patterns.
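The blind setup and random assignment described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `build_schedule`, the seat labels "A" and "B", and the participant names are all hypothetical, not part of any established protocol.

```python
import random

def build_schedule(judges, humans, rounds_per_judge, ai_label="ChatGPT-4", seed=None):
    """Build a list of rounds in which the AI's seat is chosen at random.

    The judge would only ever see the seat labels "A" and "B"; the mapping
    from seat to participant stays with the experimenter.
    """
    rng = random.Random(seed)
    schedule = []
    for judge in judges:
        for round_no in range(1, rounds_per_judge + 1):
            human = rng.choice(humans)      # pick a human interlocutor for this round
            witnesses = [human, ai_label]
            rng.shuffle(witnesses)          # randomize which seat the AI occupies
            schedule.append({
                "judge": judge,
                "round": round_no,
                "seats": {"A": witnesses[0], "B": witnesses[1]},  # hidden from the judge
            })
    return schedule

# Hypothetical participants, used only to show the shape of the output.
schedule = build_schedule(
    judges=["judge-1", "judge-2"],
    humans=["human-1", "human-2", "human-3"],
    rounds_per_judge=3,
    seed=42,
)
for entry in schedule:
    print(entry["judge"], "round", entry["round"], "-> judge sees only seats A and B")
```

Fixing the seed makes the schedule reproducible for auditing, while the shuffle prevents the AI from always appearing in the same seat.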

Duration and Interaction:

- Each conversation should last for a sufficient duration to allow the judge to ask diverse questions and probe the participants’ responses.

- The interactions should be natural and cover a wide range of topics to test the AI’s versatility.

Evaluation:

- After each round, the judge must decide which participant is the AI and which is the human.

- Collect data on the judge’s decisions across all rounds.
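One possible way to record the judge’s decisions is a small record type that stores the ground truth next to the guess. The `Verdict` class, its field names, and the sample data below are assumptions made for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Verdict:
    judge: str
    round_no: int
    ai_seat: str       # ground truth: the seat ("A" or "B") the AI actually occupied
    guessed_seat: str  # the seat the judge believed was the AI

    @property
    def correct(self) -> bool:
        return self.ai_seat == self.guessed_seat

def identification_accuracy(verdicts):
    """Fraction of rounds in which the judge correctly picked out the AI."""
    if not verdicts:
        raise ValueError("no verdicts recorded")
    return sum(v.correct for v in verdicts) / len(verdicts)

# Made-up verdicts, only to show how the data might be collected.
verdicts = [
    Verdict("judge-1", 1, ai_seat="A", guessed_seat="A"),
    Verdict("judge-1", 2, ai_seat="B", guessed_seat="A"),
    Verdict("judge-2", 1, ai_seat="B", guessed_seat="B"),
    Verdict("judge-2", 2, ai_seat="A", guessed_seat="B"),
]
print(identification_accuracy(verdicts))  # 0.5 for this sample
```

An accuracy near 0.5 means the judges did no better than guessing; an accuracy near 1.0 means the AI was easy to spot.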

Criteria for Passing

Indistinguishability:

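- If the judge cannot reliably tell the AI from the human, the AI can be considered to have passed the Turing Test. A commonly cited threshold comes from Turing’s own 1950 prediction that an average interrogator would have no more than a 70% chance of making the right identification after five minutes of questioning.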

Error Rate:

- Analyze the error rate of the judge’s decisions. A low error rate implies high distinguishability, while a high error rate indicates successful mimicry by the AI.
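One way to make "reliably distinguish" concrete is to ask whether the judges’ accuracy is statistically above the 50% expected from pure guessing. The sketch below uses a one-sided exact binomial test; the function names, the 0.05 significance level, and the example counts are assumptions for illustration, not a standard prescribed by the Turing Test itself.

```python
from math import comb

def p_value_above_chance(correct, total, chance=0.5):
    """One-sided exact binomial p-value: P(X >= correct) for X ~ Binomial(total, chance)."""
    return sum(
        comb(total, k) * chance**k * (1 - chance) ** (total - k)
        for k in range(correct, total + 1)
    )

def judge_can_distinguish(correct, total, alpha=0.05):
    """True if the judges' accuracy is significantly better than guessing."""
    return p_value_above_chance(correct, total) < alpha

# Hypothetical counts: 55 vs. 70 correct identifications out of 100 rounds.
for correct in (55, 70):
    print(correct, "correct of 100 -> distinguishable:", judge_can_distinguish(correct, 100))
```

Under this reading, the AI "passes" when the judges’ accuracy cannot be separated from chance, which is the same thing as a high error rate in the judges’ decisions.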

Challenges and Considerations

Variability in Judges:

- Different judges may have varying levels of scrutiny and skepticism. Including multiple judges can provide a more comprehensive assessment.
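To see how much judges differ, the results can be broken down per judge before they are pooled. This is a minimal sketch; the `accuracy_by_judge` helper and the sample records are hypothetical.

```python
from collections import defaultdict

def accuracy_by_judge(records):
    """Map each judge to the fraction of rounds they identified the AI correctly.

    `records` is an iterable of (judge, was_correct) pairs.
    """
    totals = defaultdict(lambda: [0, 0])  # judge -> [correct, total]
    for judge, was_correct in records:
        totals[judge][0] += int(was_correct)
        totals[judge][1] += 1
    return {judge: correct / total for judge, (correct, total) in totals.items()}

# Made-up records: one skeptical judge and one who is fooled more often.
records = [
    ("judge-1", True), ("judge-1", True), ("judge-1", False), ("judge-1", True),
    ("judge-2", False), ("judge-2", True), ("judge-2", False), ("judge-2", False),
]
per_judge = accuracy_by_judge(records)
spread = max(per_judge.values()) - min(per_judge.values())
print(per_judge, "spread:", spread)
```

A large spread between judges suggests that the pooled result depends heavily on who happens to be judging, which is the motivation for including several judges.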

Complexity of Questions:

- The complexity and depth of the questions asked by the judge can significantly affect the outcome. Ensure a mix of straightforward and complex questions.

Human-Like Mistakes:

- To appear more human-like, the AI might need to make occasional mistakes or exhibit human-like quirks, which can be a challenging balance to achieve.

Ethical Considerations

Transparency:

- Participants should be fully aware of the nature of the test and their role in it.

Data Privacy:

- Ensure that any data collected during the test is anonymized and used solely for the purpose of the study.
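As a rough illustration of the data-privacy point, participant identifiers can be replaced with keyed hashes before the results are stored. Note that this is pseudonymization rather than full anonymization, since whoever holds the key can recompute the mapping. The helper name, the "p-" prefix, and the example record are assumptions.

```python
import hashlib
import hmac
import secrets

def make_pseudonymizer(secret_key: bytes):
    """Return a function that maps a participant ID to a stable pseudonym."""
    def pseudonymize(participant_id: str) -> str:
        digest = hmac.new(secret_key, participant_id.encode("utf-8"), hashlib.sha256)
        return "p-" + digest.hexdigest()[:12]
    return pseudonymize

# The key should be stored separately from the study data (or destroyed afterwards).
key = secrets.token_bytes(32)
pseudonymize = make_pseudonymizer(key)

# Hypothetical raw record as it might come out of the chat interface.
record = {"participant": "alice@example.com", "guessed_seat": "A"}
safe_record = {**record, "participant": pseudonymize(record["participant"])}
print(safe_record)
```

The same participant always maps to the same pseudonym, so per-judge analysis still works without storing the original identifier.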

Impact on Participants:

- Be mindful of the potential psychological impact on human participants, especially if the AI’s responses are indistinguishably human.

Recent Tests

Recent Turing tests conducted on GPT-4 have demonstrated noteworthy advances in AI’s ability to mimic human-like conversation. In a study involving 500 participants, who conversed with respondents that included a human, GPT-3.5, GPT-4, and the 1960s-era program ELIZA, participants judged GPT-4 to be human 54% of the time. This is a substantial improvement over ELIZA, which was judged to be human 22% of the time, and roughly on par with GPT-3.5, which scored 50%. The human respondent was correctly identified as human 67% of the time (livescience.com; Digital Classroom).
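Whether a rate such as 54% differs meaningfully from the 50% expected by chance depends on how many conversations it is based on. The sketch below computes a Wilson score interval for a proportion. The sample size of 100 games is purely hypothetical (the per-condition counts are not given here), so the output illustrates the method and says nothing about the study’s actual uncertainty.

```python
from math import sqrt

def wilson_interval(successes, total, z=1.96):
    """Wilson score interval for a proportion (z=1.96 gives roughly 95% coverage)."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = successes / total
    denom = 1 + z**2 / total
    center = (p + z**2 / (2 * total)) / denom
    half_width = z * sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return center - half_width, center + half_width

# Hypothetical: 54 of 100 games judged "human" (the 100 is an assumed sample size).
low, high = wilson_interval(54, 100)
print(f"judged human: 54%, interval {low:.3f} to {high:.3f}")
```

If the interval contains 0.5, the observed rate cannot be distinguished from judges guessing at random at that sample size.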

In another experiment, conducted with the approval of UC San Diego’s IRB, 652 participants played 1,810 games with either another human or an AI, including GPT-4 under several different prompts. The results showed that the success rate (the rate at which the interrogator decided the witness was human) varied significantly with the prompt used. The best GPT-4 prompt achieved a success rate of 41%, while human participants had a success rate of 63% (ar5iv).

These findings highlight the nuanced and context-dependent nature of AI’s performance in Turing tests. While GPT-4 exhibits a high level of sophistication in generating human-like responses, its success can fluctuate based on the specific conversational scenario and prompt used. This variability suggests that while GPT-4 is a significant step forward, it still faces challenges in consistently passing as human in diverse contexts.

These studies underscore the progress in AI technology and its implications for future human-machine interactions. The ability of AI to mimic human conversation more convincingly raises important questions about the social and economic consequences of AI systems being perceived as human.

Conclusion

Conducting a Turing Test with ChatGPT-4 involves careful planning, execution, and analysis. By ensuring a fair and rigorous setup, you can effectively evaluate whether the AI can mimic human behavior to a degree that it becomes indistinguishable from actual human interactions. This test not only challenges the capabilities of modern AI but also provides insights into the evolving boundaries between human and machine intelligence.

References

Cameron R. Jones and Benjamin K. Bergen. Does GPT-4 Pass the Turing Test?